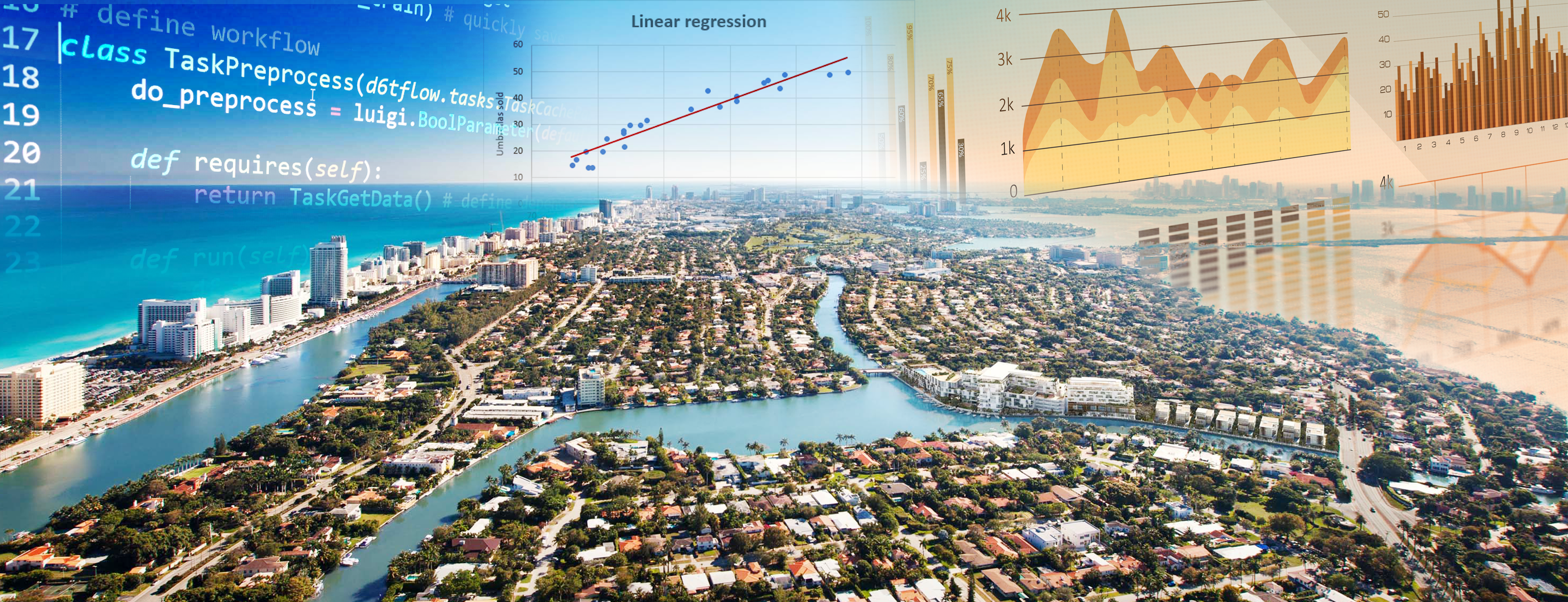

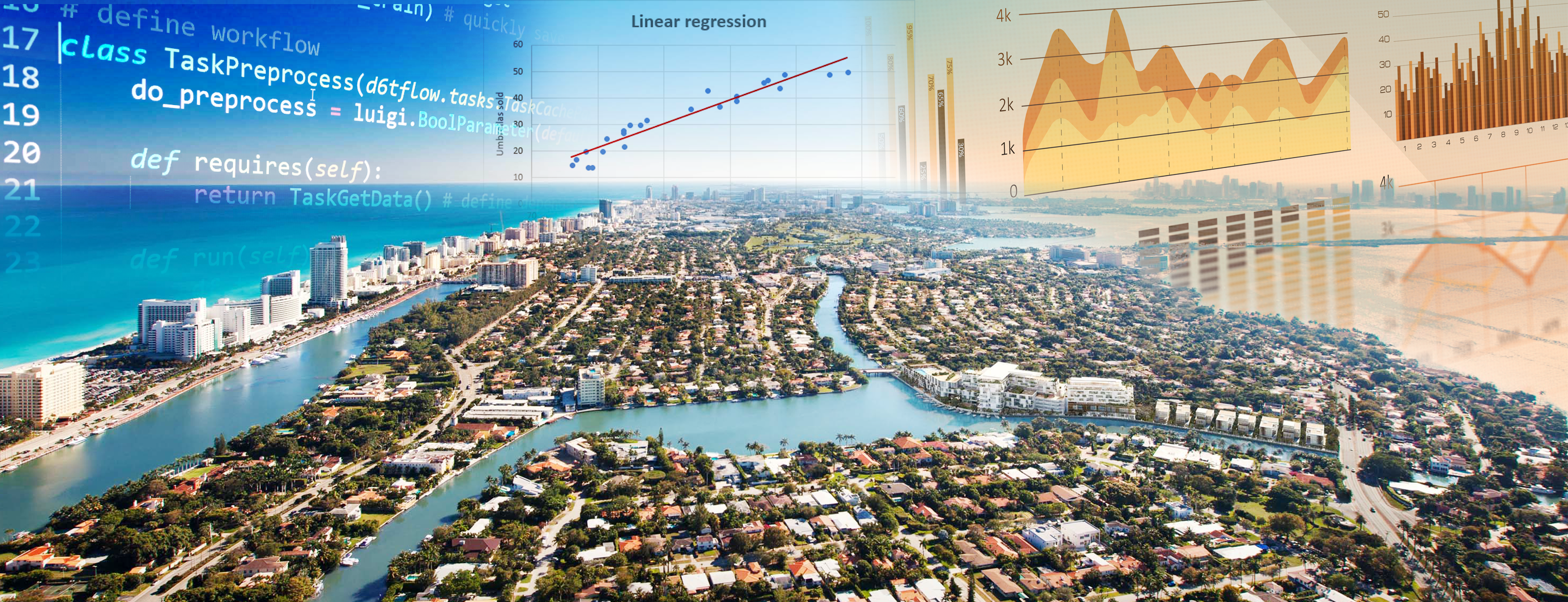

To perform a micro analysis of the real estate market, we drew data from three sources: the property appraiser data from the Miami-Dade County Open Data Hub (https://gis-mdc.opendata.arcgis.com/datasets/property-point-view), a dataset of gun violence incidents reported between 2015 and 2018 (https://www.kaggle.com/ericking310/us-gun-violence), and a dataset of neighborhood facilities around each property that we generated using ArcGIS (https://developers.arcgis.com/python/) geocoding services.

We focused on the properties sold in 2019. After preprocessing the data, we ended up with a dataset of 4595 properties. For each property, we calculated a gun violence score that accounts for the gun violence incidents that occurred within 1 mile of the property. We also used the ArcGIS geocoding APIs to count the grocery stores, coffee shops, educational centers, and other facilities in the neighborhood of every property (within 1 mile). We then added the gun violence score and the neighborhood facility counts to the properties dataset as new columns and used the result for our analysis.
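The 1-mile radius filter described above can be sketched with a great-circle (haversine) distance. This is a minimal illustration rather than the code used in the analysis: the function names, the coordinates, and the use of the haversine formula are all assumptions made for the example.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def incidents_within(prop, incidents, radius_miles=1.0):
    """Return the incident locations no farther than radius_miles from prop."""
    return [
        inc for inc in incidents
        if haversine_miles(prop[0], prop[1], inc[0], inc[1]) <= radius_miles
    ]


# Hypothetical (latitude, longitude) pairs in the Miami area.
property_location = (25.7617, -80.1918)
incident_locations = [
    (25.7617, -80.1918),  # same point as the property
    (25.7660, -80.1918),  # roughly 0.3 miles north
    (25.8617, -80.1918),  # roughly 7 miles north
]

nearby = incidents_within(property_location, incident_locations)
print(len(nearby))
```

The same filter applies to facility locations: swapping the incident list for, say, grocery store coordinates gives the per-property facility count.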

We split the dataset into two sets: 80% for training and the remaining 20% for testing. We first implemented a linear regression model, fit it to the training data, and validated it on the testing set. The model's R^2 score was 70% and its mean absolute error was 34,114, meaning that the predicted prices deviate from the actual prices in our test set by $34,114 on average.
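To make the split and the two metrics concrete, here is a small sketch in plain Python. The helper names and the toy prices are assumptions for illustration, and the integer range only stands in for the 4595 property rows; it is not the pipeline used in the analysis.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle the rows and return (train, test) using the given test share."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Stand-in for the 4595 property rows: an 80/20 split by row index.
train_rows, test_rows = train_test_split(range(4595))
print(len(train_rows), len(test_rows))

# Toy prices in dollars (hypothetical values, not from the dataset).
actual = [200000, 300000, 400000]
predicted = [210000, 290000, 430000]
mae = mean_absolute_error(actual, predicted)
r2 = r2_score(actual, predicted)
print(round(mae, 2), round(r2, 3))
```

The mean absolute error is in the same unit as the target, which is why it can be read directly as an average dollar deviation.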

In an effort to improve our predictions, we also implemented a Gradient Boosting Regressor, which yielded slightly better predictions, with a 75% R^2 score and a mean absolute error of 29,822. By inspecting the feature importances, we observed that the building size in square feet had the highest weight, followed by the building’s coordinates and the gun violence score, reinforcing the intuition that property size, location, and safety are key features that drive property prices.
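As a rough illustration of how gradient boosting produces feature importances, the sketch below boosts depth-1 trees (stumps) on squared-error residuals and credits each feature with the error reduction of the splits that use it. It is a toy stand-in written for this explanation, not the Gradient Boosting Regressor used in the analysis, and the data, function names, and hyperparameters are all hypothetical.

```python
def fit_stump(X, residuals):
    """Find the one-feature threshold split that most reduces squared error."""
    mean_r = sum(residuals) / len(residuals)
    base_sse = sum((r - mean_r) ** 2 for r in residuals)
    best = None  # (gain, feature, threshold, left_value, right_value)
    for j in range(len(X[0])):
        # Drop the largest value so the right side is never empty.
        for thr in sorted({row[j] for row in X})[:-1]:
            left = [r for row, r in zip(X, residuals) if row[j] <= thr]
            right = [r for row, r in zip(X, residuals) if row[j] > thr]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((r - lm) ** 2 for r in left)
                   + sum((r - rm) ** 2 for r in right))
            gain = base_sse - sse
            if best is None or gain > best[0]:
                best = (gain, j, thr, lm, rm)
    return best


def fit_boosting(X, y, n_rounds=20, learning_rate=0.5):
    """Boost stumps on residuals; return (base, stumps, importances)."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps = []
    gains = [0.0] * len(X[0])
    for _ in range(n_rounds):
        residuals = [t - p for t, p in zip(y, preds)]
        gain, j, thr, lv, rv = fit_stump(X, residuals)
        stumps.append((j, thr, lv, rv))
        gains[j] += gain  # credit the feature used by this split
        preds = [p + learning_rate * (lv if row[j] <= thr else rv)
                 for p, row in zip(preds, X)]
    total = sum(gains)
    return base, stumps, [g / total for g in gains]


# Hypothetical rows: [building size in sq ft, unrelated feature].
X = [[1000, 3], [1500, 1], [2000, 4], [2500, 2], [3000, 5], [3500, 0]]
y = [100 * row[0] for row in X]  # price driven by size only

base, stumps, importances = fit_boosting(X, y)
print(importances)
```

Because the toy price depends only on size, the first feature receives most of the importance, mirroring how building size dominated in the analysis.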

It was interesting, although not surprising, to observe the positive impact of city amenities and good schools on prices, while a high number of crimes and bad schools in the area correlated strongly with much lower prices.

To carry out this analysis ourselves, we assigned a weight to each type of incident, ranging from minor incidents involving firearms, to injuries, and ultimately to deaths caused by gun violence.
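One way to turn such weights into a score is a weighted sum over the incidents near a property. The weights below (1 per incident, 2 per injury, 5 per death) and the field names `n_injured` and `n_killed` are assumptions chosen for illustration; the actual weights used in the analysis are not stated here.

```python
# Hypothetical severity weights; the real values are not given in the text.
WEIGHTS = {"incident": 1.0, "injured": 2.0, "killed": 5.0}


def incident_severity(n_injured, n_killed, weights=WEIGHTS):
    """Score one incident: a base weight plus weighted injuries and deaths."""
    return (weights["incident"]
            + weights["injured"] * n_injured
            + weights["killed"] * n_killed)


def gun_violence_score(incidents, weights=WEIGHTS):
    """Sum the severity of every incident in the property's neighborhood."""
    return sum(
        incident_severity(inc["n_injured"], inc["n_killed"], weights)
        for inc in incidents
    )


# Hypothetical incidents already filtered to within 1 mile of a property.
nearby_incidents = [
    {"n_injured": 0, "n_killed": 0},  # firearm incident, nobody hurt
    {"n_injured": 2, "n_killed": 0},  # two people injured
    {"n_injured": 1, "n_killed": 1},  # one injured, one killed
]

score = gun_violence_score(nearby_incidents)
print(score)
```

Keeping the weights in a single dictionary makes it easy to test how sensitive the resulting score, and the model that consumes it, is to the chosen values.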

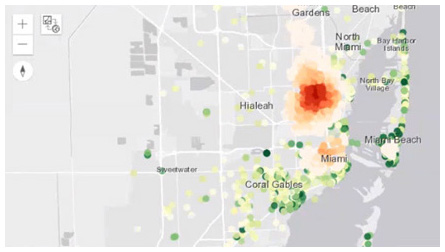

The screenshot shows in red the properties that are most affected by gun violence.
